There is a myth, promulgated by both quacks and academics who should know better, that medical errors are the third leading cause of death in the United States. You’ll see figures of 250,000 or even 400,000 deaths each year due to medical errors, which would indeed make them the third leading cause of death after heart disease (635,000/year) and cancer (598,000/year). When last I discussed this issue three years ago, specifically a rather poor study out of Johns Hopkins (the Makary study) that estimated that 250,000 to 400,000 deaths per year are due to medical errors, I pointed out how these figures are vastly inflated and don’t make sense even on the surface. For one thing, there are only 2.7 million total deaths per year in the US, so these estimates, if accurate, would translate into 9% to 15% of all deaths being due to medical errors. It’s even worse than that, though. This particular study looked at hospital-based deaths, of which there are around 715,000 per year. If the estimates were accurate, medical errors would cause between 35% and 56% of all in-hospital deaths, numbers that are highly implausible, as would be obvious to anyone who bothered to look at the appropriate denominators. Unfortunately, in the three years since its publication, the Makary study has taken on a life of its own, and it has basically become commonly accepted knowledge that medical errors are the third leading cause of death, even though this estimate is based on highly flawed studies and these numbers are five- to ten-fold greater than the number of people who die in auto collisions every year.
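
The denominator check described above is simple enough to reproduce. Here is a minimal sketch in Python that uses only the figures quoted in this post; the function and variable names are my own, chosen purely for illustration.

```python
# Figures quoted in the post (US, per year).
TOTAL_DEATHS = 2_700_000                   # all deaths
HOSPITAL_DEATHS = 715_000                  # in-hospital deaths
CLAIMED_ERROR_DEATHS = (250_000, 400_000)  # low and high "medical error" estimates


def share_of(claimed: int, denominator: int) -> float:
    """Return the claimed deaths as a percentage of the denominator."""
    return 100 * claimed / denominator


for claimed in CLAIMED_ERROR_DEATHS:
    print(
        f"{claimed:,} deaths: "
        f"{share_of(claimed, TOTAL_DEATHS):.1f}% of all deaths, "
        f"{share_of(claimed, HOSPITAL_DEATHS):.1f}% of in-hospital deaths"
    )
```

Rounded to whole percentages, the output matches the 9% to 15% and 35% to 56% ranges given above.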

I see this number popping up in the most unexpected places, mentioned matter-of-factly, as though it were truth that everyone accepts:

Medical errors are NOT the third leading cause of death in the US. For that to be true, one-third to one-half of all hospital deaths would have to be due to medical errors.

Damn, that lie just won't die, and even good reporters fall for it. That's why it's so insidious. https://t.co/XtkP2CX2gY

— David Gorski, MD, PhD (@gorskon) February 1, 2019

Yes, Arthur Allen, a writer I’ve admired since his book Vaccine, casually included that factoid in his story.

The attempt to quantify how many deaths are attributable to medical error began in earnest in 2000 with the Institute of Medicine’s To Err Is Human, which estimated that the number of deaths per year due to medical error was 44,000 to 98,000, roughly one to two times the annual death toll from automobile crashes. In response to the study, the quality improvement (QI) revolution began. Every hospital began implementing QI initiatives. Indeed, I was co-director of a statewide QI effort for breast cancer patients for three years. Yet, as Mark Hoofnagle points out in the Twitter thread above, the estimates for “death by medicine” keep increasing. They went from 100,000 to 200,000 and now as high as 400,000. On quack websites, the number is even higher. For instance, über-quack Gary Null teamed with Carolyn Dean, Martin Feldman, Debora Rasio, and Dorothy Smith to write a paper, “Death by Medicine,” which estimated that the total number of iatrogenic deaths is nearly 800,000 a year. If true, that would make iatrogenic deaths the number one cause of death and nearly one-third of all deaths in the US. Basically, when it comes to these estimates, it seems as though everyone is in a race to see who can blame the most deaths on medical errors.

How did we get here? As Mark Hoofnagle put it:

Here's the history, the "3rd cause" canard comes from a major frameshift on measuring error, and a questionable algorithmic measurement of error that does not actually detect mistakes but "ripples" in the EMR that are *proxies* for error – ICU admissions, major order changes etc.

— Mark Hoofnagle (@MarkHoofnagle) February 1, 2019

Mark was referring to the use of the Institute for Healthcare Improvement’s Global Trigger Tool, which is arguably way too sensitive. Also, as I explained in my deconstruction of the Johns Hopkins paper, the authors conflated unavoidable complications with medical errors, didn’t consider very well whether the deaths were potentially preventable, and extrapolated from small numbers. Many of these studies also used administrative databases, which are primarily designed for insurance billing and thus not very good for other purposes.

So, if the estimates between 200,000 and 400,000 are way too high, what is the real number of deaths that can be attributed to medical error? How would we go about estimating it? As part of that Twitter exchange, Mark pointed me to a recent publication that suggests how. Not surprisingly, its estimates are many-fold lower than those of the Hopkins study. Also not surprisingly, it got basically no press coverage. The study was published two weeks ago in JAMA Network Open; it’s by Sunshine et al. out of the University of Washington and is entitled “Association of Adverse Effects of Medical Treatment With Mortality in the United States: A Secondary Analysis of the Global Burden of Diseases, Injuries, and Risk Factors Study”.

The first thing you should note is that the study doesn’t just look at medical errors, but rather at all adverse events and their association with patient mortality. That basically means any adverse event, whether it was due to a medical error or not. The study itself is a cohort study that uses the database of the Global Burden of Diseases, Injuries, and Risk Factors (GBD) study to estimate changes in the rate of death due to adverse events from 1990 to 2016. This database is described thus in the paper:

The 2016 GBD study is a multinational collaborative project with an aim of providing regular and consistent estimates of health loss worldwide. Methods for GBD 2016 have been reported in full elsewhere. Briefly, data were obtained from deidentified death records from the National Center for Health Statistics; records included information on sex, age, state of residence at time of death, and underlying cause of death. Causes were classified according to the International Classification of Diseases, Ninth Revision (ICD-9), for deaths prior to 1999 and the International Statistical Classification of Diseases and Related Health Problems, Tenth Revision (ICD-10) for subsequent deaths. Each death was categorized as resulting from a single underlying cause. All ICD codes were mapped to the GBD cause list, which is hierarchically organized, mutually exclusive, and collectively exhaustive.

And:

The GBD methodology also accounts for when ill-defined or implausible causes were coded as the underlying cause of death. Plausible underlying causes of death were assigned to each ill-defined or implausible cause of death according to proportions derived in 1 of 3 ways: (1) published literature or expert opinion, (2) regression models, and (3) initial proportions observed among targets.

If you want more detail about the database, the paper in which it was reported is open access, but here’s a bit about the data sources:

The GBD study combines multiple data types to assemble a comprehensive cause of death database. Sources of data included VR and VA data; cancer registries; surveillance data for maternal mortality, injuries, and child death; census and survey data for maternal mortality and injuries; and police records for interpersonal violence and transport injuries. Since GBD 2015, 24 new VA studies and 169 new country-years of VR data at the national level have been added. Six new surveillance country-years, 106 new census or survey country-years, and 528 new cancer-registry country-years were also added.

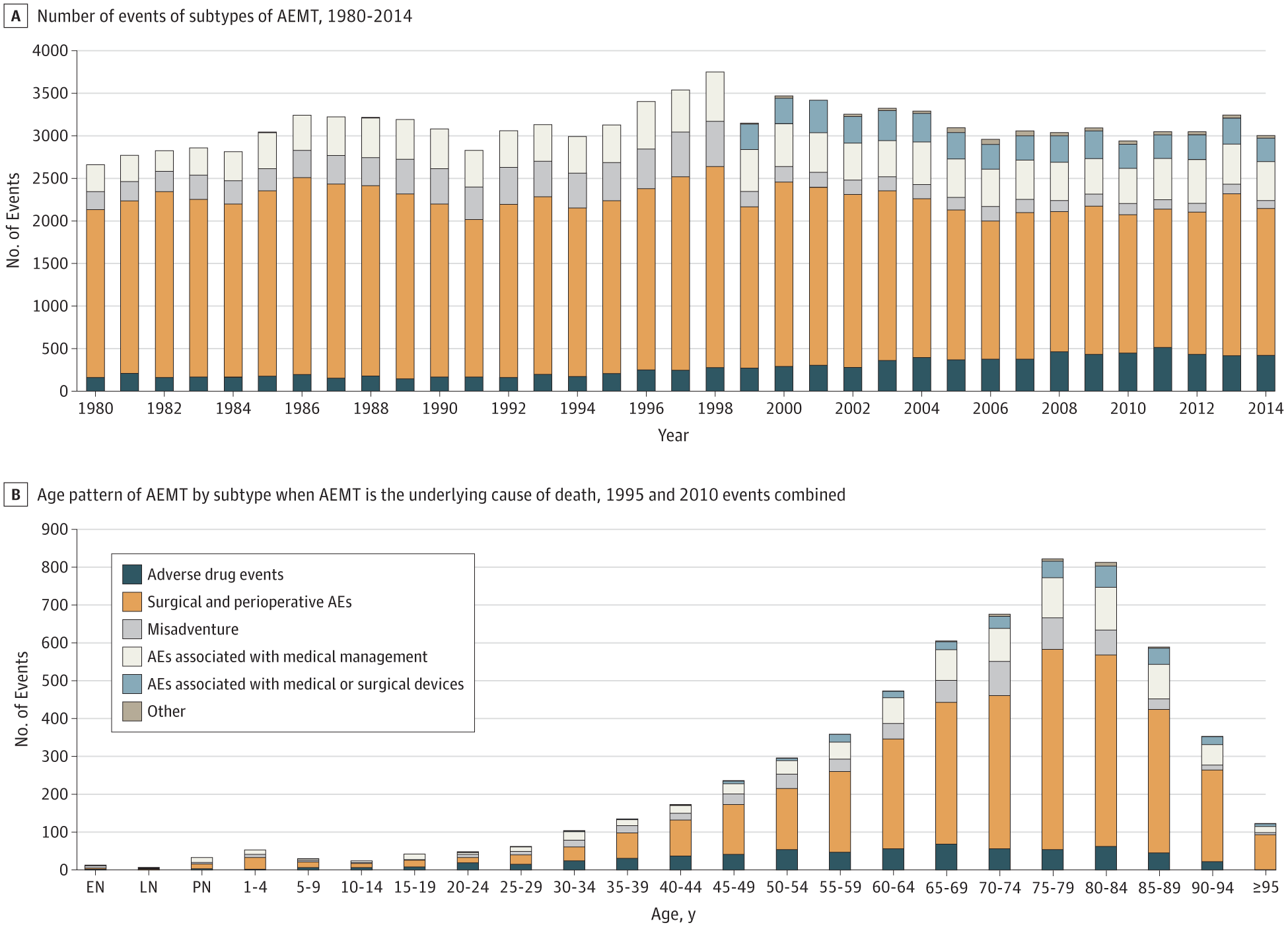

Adverse effects of medical treatment (AEMT) were classified into six categories: (1) adverse drug events, (2) surgical and perioperative adverse events, (3) misadventure (events likely to represent medical error, such as accidental laceration or incorrect dosage), (4) adverse events associated with medical management, (5) adverse events associated with medical or surgical devices, and (6) other. The authors used a method known as cause-of-death ensemble modeling (CODEm), a standard analytic tool used in GBD cause-specific mortality analyses. This method was used to generate mortality rate and cause fraction (percentage of all-cause deaths due to a specific GBD cause) estimates for the years 1990 through 2016. Finally, the authors analyzed the cause-of-death chains for all deaths from 1980 to 2014 to determine (1) how frequently AEMT appeared anywhere within a death certificate’s cause-of-death chain (ie, not as the underlying cause) and (2) which other contributing causes were most frequently found in the causal chain when AEMT was certified as the underlying cause.

Let’s look at the authors’ primary results. First, they found 123,603 deaths (95% UI, 100,856-163,814 deaths) in which AEMT was determined to be the underlying cause of death. I must admit that when I first read that, for some reason I had a brain fart in which I thought the authors were saying that they had found 123,603 deaths per year due to AEMT. (Too much IOM and Hopkins on the brain, I guess.) Actually, that was the total number for the entire period from 1990 to 2016.

Here’s the rest of the primary findings of the study:

The absolute number of deaths in which AEMT was the underlying cause increased from 4180 (95% UI, 3087-4993) in 1990 to 5180 (95% UI, 4469-7436) in 2016. Most of this increase was due to population growth and aging, as demonstrated by a 21.4% decrease (95% UI, 1.3%-32.2%) in the national age-standardized AEMT mortality rate over the same period, from 1.46 (95% UI, 1.09-1.76) deaths per 100 000 population in 1990 to 1.15 (95% UI, 1.00-1.60) deaths per 100 000 population in 2016 (Figure 1A). When not exclusively measured as the underlying cause of death, AEMT appeared in the cause-of-death chain in 2.7% of all deaths from 1980 to 2014, which corresponds to AEMT being a contributing cause for an additional 20 deaths for each death when it is the underlying cause. Mortality associated with AEMT as either an underlying or contributing cause appeared in 2.8% of all deaths.

Let’s unpack this a minute. We’re looking at a number of deaths per year due to AEMT that’s 50- to nearly 80-fold smaller than the numbers in the Hopkins study. More than that, the number normalized to population is falling, having fallen 21% over 26 years.
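
For anyone who wants to check the comparison, here is a minimal Python sketch using the Hopkins range and the 2016 figures quoted from the paper. The variable names are mine. Note that the decline computed from the rounded published rates comes out slightly below the paper’s 21.4%, presumably because the authors worked from unrounded values (that is my assumption, not something the paper states).

```python
# Figures quoted in the post.
HOPKINS_RANGE = (250_000, 400_000)  # claimed deaths/year from medical error
GBD_AEMT_2016 = 5_180               # deaths with AEMT as underlying cause, 2016
RATE_1990 = 1.46                    # age-standardized deaths per 100,000, 1990
RATE_2016 = 1.15                    # age-standardized deaths per 100,000, 2016

# How many times larger are the Hopkins figures than the GBD estimate?
fold_low = HOPKINS_RANGE[0] / GBD_AEMT_2016
fold_high = HOPKINS_RANGE[1] / GBD_AEMT_2016

# Relative decline in the age-standardized rate, from the rounded rates.
pct_decline = 100 * (RATE_1990 - RATE_2016) / RATE_1990

print(f"Hopkins estimates are {fold_low:.0f}- to {fold_high:.0f}-fold larger")
print(f"Age-standardized rate fell by about {pct_decline:.1f}%")
```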

So what’s the difference between this study and studies like the Hopkins study and the studies upon which the Hopkins study was based? First, it uses a database designed to estimate the prevalence of different causes of death, rather than one designed for insurance billing. Second, it used rigorous methodology to identify deaths that were primarily due to AEMTs. One thing about this study that makes sense comes from its observation that AEMT is a contributing cause for 20 additional deaths for each death for which it is the underlying cause. For 5,180 deaths in the most recent year, that means 108,780 deaths had an AEMT as a contributing or primary cause that year, which is in line with the IOM estimates. It’s also in line with my assertion that one major issue with previous studies is the unspoken assumption behind them that if a patient had an AEMT during his hospital course, it was the AEMT that killed him. As for the studies finding up to 400,000 deaths a year due to medical errors, they are, as Monty Python would say, right out.
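
The arithmetic behind that 108,780 figure is worth spelling out. A minimal sketch, using only the numbers quoted from the paper (the names are illustrative):

```python
# Figures quoted from the paper.
UNDERLYING_2016 = 5_180          # deaths with AEMT as the underlying cause, 2016
CONTRIBUTING_PER_UNDERLYING = 20 # additional deaths with AEMT as a contributing cause

# Each underlying-cause death is counted once, plus 20 contributing-cause deaths.
total_involving_aemt = UNDERLYING_2016 * (1 + CONTRIBUTING_PER_UNDERLYING)

# Share of AEMT-involved deaths in which the AEMT was the underlying cause.
underlying_share = 100 * UNDERLYING_2016 / total_involving_aemt

print(f"Deaths involving an AEMT in any role: {total_involving_aemt:,}")
print(f"AEMT was the underlying cause in about {underlying_share:.1f}% of them")
```

In other words, by these figures the AEMT was the underlying cause in fewer than 1 in 20 of the deaths in which it appeared at all.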

Remember, too, that this is a study of all AEMTs, but the authors did try to estimate what proportion of these AEMTs were due to medical error, or, as they put it, “misadventure.” Take a look at this graph, Figure 3 from the paper:

First of all, notice how, not unexpectedly, AEMTs increase with patient age. Older patients, of course, have more medical comorbidities and tend to be more medically fragile, with less room for things to go wrong. Second, notice how small a fraction of the total AEMTs, in all age ranges save one, were deemed to have been due to misadventure representing probable medical error. As the authors put it:

In the secondary analysis, in which AEMT was listed as the underlying cause of death, 8.9% were due to adverse drug events, 63.6% to surgical and perioperative adverse events, 8.5% to misadventure, 14% to adverse events associated with medical management, 4.5% to adverse events associated with medical or surgical devices, and 0.5% to other AEMT (eTable 6 in the Supplement). The ranking of the subtypes was stable over time (Figure 3A) but with increasing rates of adverse drug events and decreasing rates of misadventure and surgical and perioperative adverse events. Adverse events related to medical or surgical devices and other AEMT were nearly absent in the 1990s but have been responsible for a stable proportion of overall AEMT since the switch to ICD-10 coding of death certificates. Surgical and perioperative adverse events were the most common subtype of AEMT in almost all age groups and increased in importance with age (Figure 3B); misadventure was the largest subtype in neonates, and adverse drug events predominated in individuals aged 20 to 24 years.
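
To get a feel for scale, here is a rough back-of-envelope sketch in Python. It first checks that the quoted subtype percentages add up to 100%, then applies the 8.5% misadventure share to the 2016 underlying-cause count. That second step is my own assumption for illustration only: the paper reports the subtype shares for its secondary analysis, not for 2016 specifically, so the result is not a figure from the study.

```python
# Subtype shares (percent) quoted from the paper's secondary analysis.
SUBTYPE_SHARES = {
    "adverse drug events": 8.9,
    "surgical and perioperative": 63.6,
    "misadventure": 8.5,
    "medical management": 14.0,
    "medical or surgical devices": 4.5,
    "other": 0.5,
}

# Sanity check: the categories should account for all AEMT deaths.
total_share = round(sum(SUBTYPE_SHARES.values()), 1)
print(f"Subtype shares sum to {total_share}%")

# ASSUMPTION (mine, not the paper's): the misadventure share
# applies unchanged to the 2016 underlying-cause death count.
UNDERLYING_2016 = 5_180
misadventure_estimate = UNDERLYING_2016 * SUBTYPE_SHARES["misadventure"] / 100
print(f"Rough misadventure deaths in 2016: about {misadventure_estimate:.0f}")
```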

So what we can say from these data is that (1) AEMTs are not uncommon; (2) the vast majority of AEMTs that occur in patients who die aren’t the primary cause of death; (3) only a relatively small fraction of AEMTs are due to misadventure or medical error; and (4) population-adjusted AEMT rates have been slowly decreasing. The study is not bulletproof, of course. No study is. For instance, the GBD approach uses ICD-coded death certificates, which have shown varying degrees of reliability in identifying medical harm. In addition, it is probable that a significant number of deaths involving AEMT are not captured because of incomplete reporting. There are also issues with GBD methodology that might keep it from accurately capturing every AEMT:

…the GBD study’s cause classification system that assigns each death to only a single underlying cause means that some events associated with AEMT may be grouped elsewhere. Such groupings are dependent on which ICD code was assigned as the underlying cause. For example, adverse drug events from prescribed opioids leading to death would likely be assigned to the GBD study’s cause of “opioid abuse” (ICD-10 code, F11) or “accidental poisoning” (ICD-10 code, T40) based on the mechanism of death, whereas they are included with medical harm in many other studies based on the association with a prescription. Somewhat analogously, nosocomial infections (ICD-10 code, Y95) are often coassigned with a pathogen or type of infection when responsible for a death, and, because Y95 does not end up as the single underlying cause on such death certificates, they are not classified in the GBD study as AEMT.

So let’s say that this study’s estimates of how many people die from AEMTs and, in particular, from medical misadventure, are better estimates than those of the “third leading cause of death” studies. (I happen to think that they are, even if they might have somewhat underestimated AEMTs.) Does that mean there’s no problem? Of course not. One death from medical error is too many. Roughly 5,200 deaths a year from AEMT and 108,000 deaths in which an AEMT was contributory are too many. However, we do no one other than quacks any favors by grossly exaggerating the scope of the problem, and several lines of evidence show that deaths due to AEMTs are decreasing modestly, not skyrocketing, as the “death by medicine” crowd would have you believe. We can do better. We should do better. We won’t do better by spreading myths that medical errors are the third leading cause of death.